SK Telecom members who have released open source software can register and update their project introductions on this page by referring to the following guide.


Open Source Projects

- 1: A.X LLM Series

- 2: KoBERT

- 3: KoGPT2

- 4: KoBART

- 5: onot

- 6: SKT Passkey

1 - A.X LLM Series

A.X LLM is a series of Korean-specialized large language models independently developed by SK Telecom. The A.X 3.1 and A.X 4.0 series are publicly available as open source and can be freely used for academic research and commercial purposes.

Project Information

- Developer: SK Telecom

- License: Apache-2.0

- GitHub:

Key Features

A.X K1 Series

- 519B Sovereign Model: The model with the largest parameter scale in Korea, released in January 2026.

- National AI Foundation: Korea’s flagship AI developed through a project led by the Ministry of Science and ICT.

- Superior Performance: Built on a proprietary architecture trained on massive-scale Korean datasets.

A.X 4.0 Series

- 72B Standard Model: Optimized for large-scale Korean language processing

- 7B Light Model: Efficient lightweight model

- Korean token efficiency: ~33% improvement over GPT-4o

- Real-world deployment: Used in SK Telecom’s A. call summary service

A.X 3.1 Series

- 34B Standard Model: Independently developed sovereign AI model

- Light Model: Lightweight version

- Significantly enhanced coding and mathematical reasoning capabilities

- KMMLU benchmark: 69.20 points (~88% of A.X 4.0 performance)

A.X 4.0-VL-Light

- Vision-Language model: Integrated image and text processing

- Multimodal AI: Capable of understanding and analyzing visual information

Technical Achievements

Korean Language Processing Capabilities

- Excellent performance on KMMLU (Korean Massive Multitask Language Understanding)

- Specialized in Korean conversation, document understanding, and summarization

- Optimized for Korean business environments

Model Architecture

- A.X 3 series: Sovereign AI developed from scratch

- A.X 4 series: Built on open-source base models and enhanced through CPT (Continual Pre-Training) on large-scale Korean data

Use Cases

SK Telecom Internal Services

- A. call summary service (since May 2025)

- Customer service chatbots

- Internal document analysis and search

Potential Applications

- Korean conversational AI services

- Text generation and summarization

- Translation and sentiment analysis

- Code generation and mathematical problem solving

- Korean content creation

Benchmark Performance

| Model | Parameters | KMMLU Score | Features |
|---|---|---|---|
| A.X 4.0 Standard | 72B | 78.3 | Highest performance |
| A.X 3.1 Standard | 34B | 69.2 | Independently developed |
| A.X 4.0 Light | 7B | - | Efficiency |
| A.X 3.1 Light | - | - | Lightweight |
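The "~88% of A.X 4.0 performance" figure quoted for A.X 3.1 follows directly from the KMMLU column above. Here is a small sketch of that arithmetic. It uses only the two scores listed in the table, and the dictionary and function names are illustrative.

```python
# KMMLU scores copied from the benchmark table above (models without a score are omitted)
KMMLU_SCORES = {
    "A.X 4.0 Standard": 78.3,
    "A.X 3.1 Standard": 69.2,
}


def relative_score(model: str, reference: str = "A.X 4.0 Standard") -> float:
    """Return a model's KMMLU score as a percentage of the reference model's score."""
    return 100.0 * KMMLU_SCORES[model] / KMMLU_SCORES[reference]


# Prints: A.X 3.1 Standard reaches 88.4% of A.X 4.0 Standard
print(f"A.X 3.1 Standard reaches {relative_score('A.X 3.1 Standard'):.1f}% of A.X 4.0 Standard")
```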

Using on Hugging Face

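```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load A.X 4.0 Standard model
model_name = "SKT-AI/A.X-4.0-Standard"
tokenizer = AutoTokenizer.from_pretrained(model_name)
# torch_dtype="auto" keeps the checkpoint's native precision; device_map="auto"
# (requires the accelerate package) spreads a 72B model across available GPUs
model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto", device_map="auto")

# Generate text: unpack input_ids/attention_mask into keyword arguments
input_text = "The advancement of Korean language models"
inputs = tokenizer(input_text, return_tensors="pt").to(model.device)
outputs = model.generate(**inputs, max_new_tokens=100)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```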

Resources

- Hugging Face: SKT-AI Organization

- GitHub: SKT-AI

- Official News: SK Telecom Newsroom

- Contact: a.x@sk.com

2 - KoBERT

KoBERT is a Korean-specialized BERT model developed by SK Telecom to overcome the limitations of Google’s publicly released BERT language model in processing Korean.

Project Information

- Developer: SK Telecom T-Brain (formerly SKT AI Center)

- License: Apache License 2.0

- GitHub: https://github.com/SKTBrain/KoBERT

Key Features

1. Korean Language Optimization

- Trained on millions of Korean sentences collected from Wikipedia and news sources

- Large-scale Korean language corpus utilization

- Reflects the irregular patterns of variation characteristic of the Korean language

2. Efficient Tokenization

- Data-driven tokenization technique

- 27% fewer tokens with over 2.6% performance improvement compared to existing methods

- Subword segmentation tailored to Korean language characteristics
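The sketch below illustrates subword segmentation. It splits text by greedy longest-match lookup against a small, hand-written vocabulary, the way WordPiece-style tokenizers segment at inference time. This is a simplified stand-in: KoBERT's released tokenizer is SentencePiece-based and learns its vocabulary from data. The toy vocabulary here is invented for the example, and the `▁` prefix marks the start of a word, following the SentencePiece convention. The sample sentence means roughly "Korean natural language processing is fun".

```python
from typing import List, Set

# Hypothetical toy vocabulary; a real tokenizer learns thousands of pieces from a corpus
TOY_VOCAB = {"▁한국어", "▁자연어", "처리", "는", "▁", "재미", "있다"}


def segment(text: str, vocab: Set[str], unk: str = "[UNK]") -> List[str]:
    """Greedy longest-match subword segmentation (WordPiece-style inference)."""
    pieces = []
    for word in text.split():
        word = "▁" + word  # mark the word boundary
        start = 0
        while start < len(word):
            # Try the longest remaining substring first, then shrink it
            end = len(word)
            while end > start and word[start:end] not in vocab:
                end -= 1
            if end == start:
                # No vocabulary piece starts here: emit [UNK] for this character
                pieces.append(unk)
                start += 1
            else:
                pieces.append(word[start:end])
                start = end
    return pieces


print(segment("한국어 자연어처리는 재미있다", TOY_VOCAB))
```

Frequent units such as `▁한국어` stay whole. A word like `자연어처리는` breaks into a stem, a noun, and a particle, which is the kind of split that suits Korean's agglutinative structure.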

3. Distributed Learning Technology

- Ring all-reduce (ring-reduce) based distributed training technique

- Fast training of over a billion sentences across multiple machines

- Efficient processing of large-scale data
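The guide does not describe KoBERT's distributed training code. However, the ring-reduce idea it refers to is usually implemented as a ring all-reduce, which works in two phases:

- The gradient vector is split into N chunks.
- A reduce-scatter phase passes partial sums around the ring.
- An all-gather phase circulates the finished chunks.

Each worker therefore sends 2(N-1) chunks and only ever talks to its neighbor. The single-process simulation below sketches that textbook algorithm, with plain Python lists standing in for per-worker gradient buffers. It is illustrative, not KoBERT's implementation.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a sum all-reduce over len(buffers) workers arranged in a ring."""
    n = len(buffers)
    size = len(buffers[0])
    # Split the vector into n contiguous chunks whose sizes differ by at most one
    bounds = [(k * size // n, (k + 1) * size // n) for k in range(n)]
    data = [list(buf) for buf in buffers]  # copy so the inputs are untouched

    def add(own, incoming):
        return [a + b for a, b in zip(own, incoming)]

    def overwrite(own, incoming):
        return list(incoming)

    def exchange(chunk_for_sender, combine):
        # All workers send at once: snapshot every payload before applying any
        payloads = []
        for i in range(n):
            c = chunk_for_sender(i)
            lo, hi = bounds[c]
            payloads.append((c, data[i][lo:hi]))
        for i, (c, payload) in enumerate(payloads):
            dst = (i + 1) % n  # each worker sends only to its right-hand neighbor
            lo, hi = bounds[c]
            data[dst][lo:hi] = combine(data[dst][lo:hi], payload)

    # Phase 1, reduce-scatter: afterwards worker i holds the full sum of chunk (i + 1) % n
    for step in range(n - 1):
        exchange(lambda i: (i - step) % n, add)

    # Phase 2, all-gather: finished chunks travel around the ring, replacing stale copies
    for step in range(n - 1):
        exchange(lambda i: (i + 1 - step) % n, overwrite)

    return data


workers = [[1, 2, 3, 4, 5, 6, 7], [10, 20, 30, 40, 50, 60, 70], [100, 200, 300, 400, 500, 600, 700]]
result = ring_allreduce(workers)
print(result[0])  # [111, 222, 333, 444, 555, 666, 777]
```

Because per-worker traffic stays near twice the gradient size, adding machines does not increase what each one has to send. That is why this pattern suits training on many machines.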

4. Multi-framework Support

- PyTorch

- TensorFlow

- ONNX

- MXNet

Applications

SK Telecom Internal Usage

- Call center chatbots - Improving customer service efficiency

- AI legal/patent search service - Document search and analysis

- Machine Reading Comprehension (MRC) - Extracting accurate answers from marketing materials

- Context-based document vector generation - Similar document recommendations (patent applications)

General Use Cases

- Sentiment Analysis

- Named Entity Recognition (NER)

- Text Classification

- Question Answering Systems

- Sentence Similarity Measurement

- Text Embedding Generation
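The similarity-oriented use cases above include sentence similarity and embedding-based document recommendation. For these, sentence embeddings such as KoBERT's 768-dimensional [CLS] vectors are commonly compared with cosine similarity. The dependency-free sketch below shows this, with short made-up vectors standing in for real embeddings. The function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def most_similar(query: Sequence[float], candidates: List[Tuple[str, Sequence[float]]]):
    """Rank (name, vector) candidates by similarity to the query vector, best first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in candidates]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Made-up 3-dimensional "embeddings" for illustration only
query = [0.9, 0.1, 0.0]
docs = [("doc-a", [1.0, 0.0, 0.0]), ("doc-b", [0.0, 1.0, 0.0]), ("doc-c", [0.7, 0.7, 0.0])]
for name, score in most_similar(query, docs):
    print(f"{name}: {score:.3f}")
```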

Installation and Usage

Installation

```bash
pip install kobert-transformers
pip install transformers
```

Basic Usage

```python
from kobert_transformers import get_tokenizer
from transformers import BertModel

# Load tokenizer and model
tokenizer = get_tokenizer()
model = BertModel.from_pretrained('skt/kobert-base-v1')

# Tokenize and generate embeddings (unpack the encoded inputs as keyword arguments)
text = "Korean natural language processing is fascinating"
inputs = tokenizer(text, return_tensors='pt')
outputs = model(**inputs)

# Extract sentence embedding: final hidden state of the [CLS] token
sentence_embedding = outputs.last_hidden_state[:, 0, :].squeeze()
print(sentence_embedding.shape)  # torch.Size([768])
```

PyTorch Example

```python
import torch
from kobert_transformers import get_kobert_model, get_tokenizer

# Load model and tokenizer
tokenizer = get_tokenizer()
model = get_kobert_model()

# Process text: pad (and, if needed, truncate) to a fixed length of 128 tokens
text = "KoBERT is specialized in Korean language understanding."
encoded = tokenizer.encode_plus(
    text,
    add_special_tokens=True,
    max_length=128,
    padding='max_length',
    truncation=True,
    return_attention_mask=True,
    return_tensors='pt',
)

# Model inference without gradient tracking
with torch.no_grad():
    outputs = model(
        input_ids=encoded['input_ids'],
        attention_mask=encoded['attention_mask'],
    )

pooled_output = outputs[1]  # pooler output: [CLS] hidden state passed through a dense + tanh layer
print(pooled_output.shape)  # torch.Size([1, 768])
```

Performance Benchmarks

| Task | Dataset | KoBERT Score | Baseline |
|---|---|---|---|
| Sentiment Analysis | NSMC | 89.63% | 87.42% |
| NER | Korean NER | 86.11% | 84.13% |
| Sentence Similarity | KorSTS | 81.59% | 77.92% |
| Question Answering | KorQuAD 1.0 | 52.81 (EM) | 48.42 |

Model Specifications

- Architecture: BERT-base

- Vocabulary Size: 8,002

- Hidden Size: 768

- Number of Layers: 12

- Number of Attention Heads: 12

- Intermediate Size: 3,072

- Max Sequence Length: 512
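The specifications above are enough to estimate KoBERT's size. The sketch below counts the weights of a standard BERT encoder: embeddings, the Transformer layers, and the pooler. It assumes the usual BERT layout, including two token-type embeddings and a pooler layer. Those two details are assumptions, not values stated in this guide.

```python
def bert_param_count(vocab_size: int, hidden: int, layers: int, intermediate: int,
                     max_positions: int, type_vocab_size: int = 2,
                     with_pooler: bool = True) -> int:
    """Count parameters (weights, biases, LayerNorm) of a standard BERT encoder."""
    layer_norm = 2 * hidden  # gamma and beta
    # Token, position, and token-type embedding tables plus one LayerNorm
    embeddings = (vocab_size + max_positions + type_vocab_size) * hidden + layer_norm
    # Query, key, value, and output projections, each with a bias
    attention = 4 * (hidden * hidden + hidden)
    # Two-layer feed-forward block: hidden -> intermediate -> hidden
    feed_forward = (hidden * intermediate + intermediate) + (intermediate * hidden + hidden)
    per_layer = attention + layer_norm + feed_forward + layer_norm
    pooler = hidden * hidden + hidden if with_pooler else 0
    return embeddings + layers * per_layer + pooler


total = bert_param_count(vocab_size=8002, hidden=768, layers=12,
                         intermediate=3072, max_positions=512)
print(f"{total:,} parameters (~{total / 1e6:.0f}M)")  # 92,186,880 parameters (~92M)
```

Most of the weights sit in the 12 Transformer layers (about 85M). The compact 8,002-token vocabulary keeps the token embedding table near 6.1M parameters.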

Community and Support

Technical Support

- GitHub Issues: https://github.com/SKTBrain/KoBERT/issues

- Active community contributions

- Continuous model updates

Related Projects

Using on Hugging Face

```python
from transformers import AutoModel, AutoTokenizer

# Load directly from Hugging Face Hub
model_name = "skt/kobert-base-v1"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModel.from_pretrained(model_name)

# Inference: unpack the encoded inputs into keyword arguments
text = "KoBERT is the standard for Korean natural language processing"
inputs = tokenizer(text, return_tensors="pt")
outputs = model(**inputs)
```

License

Apache License 2.0 - Commercial use allowed

Resources

- GitHub: https://github.com/SKTBrain/KoBERT

- Hugging Face: skt/kobert-base-v1

- Documentation: GitHub README

- Issues: GitHub Issues

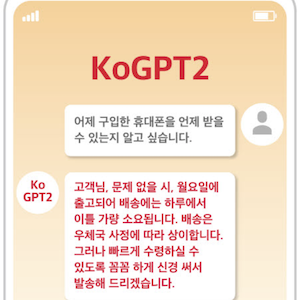

3 - KoGPT2

KoGPT2 is an open-source GPT-2 model trained on Korean text. It adapts OpenAI's GPT-2 architecture to Korean. As a result, it can be used in applications that require Korean language understanding, such as text generation, sentence completion, and chatbots.

Project Information

- Developer: SK Telecom

- Release Date: 2020 (Korea’s first open-source Korean GPT-2)

- License: CC-BY-NC-SA 4.0 (non-commercial use; modification and redistribution allowed under the same license)

- GitHub: https://github.com/SKT-AI/KoGPT2

Key Features

1. Korean Text Generation

- Natural Korean sentence generation

- Context-aware sentence completion

- Support for creative writing

2. Diverse Applications

- Chatbot building: Conversational AI services

- Text sentiment prediction: Emotion analysis

- Response generation: Generating answers to questions

- Sentence completion: Context-based text completion

- Storytelling: Creative writing support

3. Developer-Friendly

- Support for various frameworks (PyTorch, ONNX)

- Easy installation and usage

- Abundant example code provided

Installation and Usage

Installation

```bash
pip install kogpt2-transformers
```

Basic Text Generation

```python
import torch
from transformers import GPT2LMHeadModel
from kogpt2_transformers import get_kogpt2_tokenizer

# Load model and tokenizer
tokenizer = get_kogpt2_tokenizer()
model = GPT2LMHeadModel.from_pretrained('skt/kogpt2-base-v2')

# Text generation
text = "The future of artificial intelligence is"
input_ids = tokenizer.encode(text, return_tensors='pt')

# Set generation parameters
gen_ids = model.generate(
    input_ids,
    max_length=128,
    repetition_penalty=2.0,
    pad_token_id=tokenizer.pad_token_id,
    eos_token_id=tokenizer.eos_token_id,
    bos_token_id=tokenizer.bos_token_id,
    use_cache=True,
)

# Decode results
generated = tokenizer.decode(gen_ids[0])
print(generated)
```

Using Hugging Face Transformers

```python
from transformers import pipeline

# Text generation pipeline
generator = pipeline(
    'text-generation',
    model='skt/kogpt2-base-v2',
    tokenizer='skt/kogpt2-base-v2',
)

# Generate text
prompt = "Korean natural language processing technology"
result = generator(
    prompt,
    max_length=100,
    num_return_sequences=3,
    do_sample=True,  # enable sampling so temperature takes effect and the 3 sequences differ
    temperature=0.8,
)

for i, text in enumerate(result):
    print(f"Result {i+1}: {text['generated_text']}")
```

Sentiment Analysis Example

```python
from kogpt2_transformers import get_kogpt2_tokenizer
from transformers import GPT2LMHeadModel
import torch

tokenizer = get_kogpt2_tokenizer()
model = GPT2LMHeadModel.from_pretrained('skt/kogpt2-base-v2')

# Reviews for sentiment analysis
reviews = [
    "This movie was really fun",
    "The service was terrible",
    "Great product for the price",
]

for review in reviews:
    # Prompt engineering: let the model complete a positive/negative judgment
    prompt = f"{review} This review is"
    input_ids = tokenizer.encode(prompt, return_tensors='pt')

    with torch.no_grad():
        # Greedy decoding (no sampling) keeps the continuation deterministic
        output = model.generate(
            input_ids,
            max_new_tokens=10,
            num_return_sequences=1,
        )

    result = tokenizer.decode(output[0], skip_special_tokens=True)
    print(f"Original: {review}")
    print(f"Analysis: {result}\n")
```

Chatbot Building Example

```python
from kogpt2_transformers import get_kogpt2_tokenizer
from transformers import GPT2LMHeadModel
import torch

tokenizer = get_kogpt2_tokenizer()
model = GPT2LMHeadModel.from_pretrained('skt/kogpt2-base-v2')

def generate_response(user_input, context=""):
    """Generate a response conditioned on the conversation so far."""
    prompt = f"{context}\nUser: {user_input}\nAI:"
    input_ids = tokenizer.encode(prompt, return_tensors='pt')

    with torch.no_grad():
        output = model.generate(
            input_ids,
            max_length=input_ids.shape[1] + 50,
            temperature=0.8,
            top_k=50,
            top_p=0.95,
            repetition_penalty=1.2,
            do_sample=True,
        )

    response = tokenizer.decode(output[0], skip_special_tokens=True)
    # Keep only the text after the last "AI:" marker, and cut it off
    # if the model starts writing the next user turn itself
    ai_response = response.split("AI:")[-1].split("User:")[0].strip()
    return ai_response

# Chatbot conversation example
context = ""
while True:
    user_input = input("You: ")
    if user_input.lower() in ['quit', 'exit']:
        break
    response = generate_response(user_input, context)
    print(f"AI: {response}\n")
    # Append this turn to the running context
    context += f"User: {user_input}\nAI: {response}\n"
```
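The generation calls above combine several decoding controls: `repetition_penalty`, `temperature`, `top_k`, and `top_p`. The sketch below shows what each one does to the next-token distribution, using a toy list of logits. It is a simplified re-implementation for illustration, not the Hugging Face code. The penalty rule (divide positive logits, multiply negative ones) and the order used here (penalty, then temperature, then top-k, then top-p) follow common practice. The function name and token IDs are made up.

```python
import math
from typing import List, Sequence


def next_token_distribution(logits: Sequence[float], generated_ids: Sequence[int],
                            temperature: float = 1.0, top_k: int = 0, top_p: float = 1.0,
                            repetition_penalty: float = 1.0) -> List[float]:
    """Turn raw logits into sampling probabilities after common decoding controls."""
    scores = list(logits)

    # Repetition penalty: make tokens that were already generated less likely
    for tok in set(generated_ids):
        if scores[tok] > 0:
            scores[tok] /= repetition_penalty
        else:
            scores[tok] *= repetition_penalty

    # Temperature: < 1.0 sharpens the distribution, > 1.0 flattens it
    scores = [s / temperature for s in scores]

    # Unnormalized softmax weights (shifted by the max for numerical stability)
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    ranked = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)

    # Top-k: keep only the k most likely tokens
    if top_k > 0:
        for i in ranked[top_k:]:
            weights[i] = 0.0

    # Top-p (nucleus): keep the smallest top-ranked set whose probability mass reaches top_p
    if top_p < 1.0:
        total = sum(weights)
        cumulative = 0.0
        for i in ranked:
            if cumulative >= top_p:
                weights[i] = 0.0
            else:
                cumulative += weights[i] / total

    total = sum(weights)
    return [w / total for w in weights]


logits = [2.0, 1.0, 0.5, -1.0]  # toy scores for token IDs 0..3
history = [0]                   # token 0 was already generated
print(next_token_distribution(logits, history, repetition_penalty=2.0, top_k=2))
# [0.5, 0.5, 0.0, 0.0]
```

In this example the penalty halves token 0's logit until it ties with token 1, and top-k then discards everything else. Sampling only ever picks from what survives these filters.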

Model Specifications

- Architecture: GPT-2

- Parameters: 125M

- Vocabulary Size: 50,000

- Context Length: 1,024 tokens

- Training Data: Korean web documents, news, Wikipedia

Performance Benchmarks

| Task | Dataset | KoGPT2 Score |
|---|---|---|
| Text generation quality | Human evaluation | 4.2/5.0 |
| Sentence completion | Self-evaluation | 85% |
| Conversation naturalness | Self-evaluation | 78% |

Related Projects

Resources

- GitHub: https://github.com/SKT-AI/KoGPT2

- Hugging Face: skt/kogpt2-base-v2

- Tutorials: GitHub Examples

- Issues: GitHub Issues

License

CC-BY-NC-SA 4.0 - Non-commercial use; modification and redistribution allowed under the same license

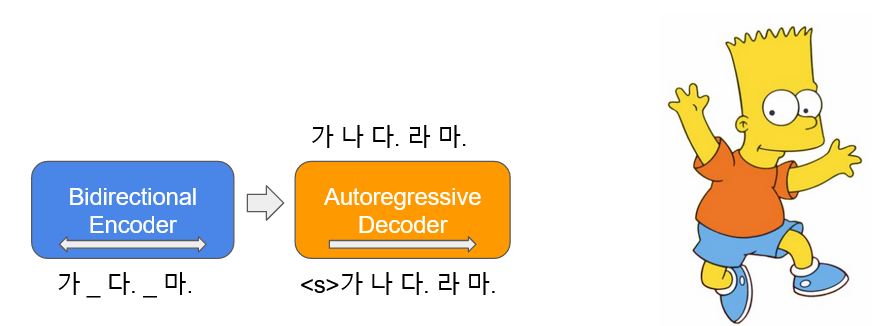

4 - KoBART

KoBART is a BART (Bidirectional and Auto-Regressive Transformers) model specialized in Korean text generation and summarization. Utilizing an Encoder-Decoder architecture, it demonstrates excellent performance across various natural language generation tasks.

Project Information

- Developer: SK Telecom (SKT-AI)

- License: CC-BY-NC-SA 4.0

- GitHub: https://github.com/SKT-AI/KoBART

Key Features

1. Encoder-Decoder Architecture

- Bidirectional encoder and auto-regressive decoder

- Optimized for text generation and transformation tasks

- Balance between context understanding and generation

2. Main Application Areas

- Text summarization: Condensing long documents into concise summaries

- Sentence generation: Producing natural Korean language sentences

- Translation: Sentence transformation and paraphrasing

- Dialogue generation: Question-answering systems

3. Korean Language Optimization

- Pre-trained on Korean corpus

- Considers Korean grammar and word order

- Supports diverse Korean language domains

Installation and Usage

Installation

```bash
pip install transformers torch
```

Basic Text Summarization

```python
from transformers import PreTrainedTokenizerFast, BartForConditionalGeneration

# Load model and tokenizer
# Note: kobart-base-v2 is a pre-trained base checkpoint; for good summaries,
# fine-tune it on a summarization dataset first (see Fine-tuning Guide)
tokenizer = PreTrainedTokenizerFast.from_pretrained('gogamza/kobart-base-v2')
model = BartForConditionalGeneration.from_pretrained('gogamza/kobart-base-v2')

# Summarize long text
text = """
SK Telecom is Korea's leading mobile telecommunications company with
extensive ICT technology including AI, 5G, and cloud services. Recently,
it developed the Korean large language model A.X and released it as
open source, contributing to the development of the domestic AI ecosystem.
"""

# Encode and generate summary with beam search
inputs = tokenizer(text, return_tensors='pt', max_length=1024, truncation=True)
summary_ids = model.generate(
    inputs['input_ids'],
    max_length=150,
    num_beams=5,
    early_stopping=True,
)

# Decode
summary = tokenizer.decode(summary_ids[0], skip_special_tokens=True)
print(summary)
```
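The summarization call above uses `num_beams=5` with `early_stopping=True`. The sketch below shows the idea behind beam search on a hypothetical toy model. In this model, greedy decoding commits to the locally best first token and misses the more probable overall sequence. It is a simplified illustration, not the `transformers` implementation: it has no length penalty and uses a simplified stopping rule. The toy probability table is made up.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical toy "model": probability of the next token given the last token
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "<s>": {"A": 0.6, "B": 0.4},
    "A": {"</s>": 0.5, "C": 0.5},
    "B": {"</s>": 0.9, "C": 0.1},
    "C": {"</s>": 1.0},
}


def next_probs(seq: List[str]) -> Dict[str, float]:
    return TOY_MODEL[seq[-1]]


def greedy(bos: str, eos: str, max_length: int) -> Tuple[List[str], float]:
    """Always take the single most likely next token."""
    seq, score = [bos], 0.0
    while len(seq) < max_length and seq[-1] != eos:
        token, p = max(next_probs(seq).items(), key=lambda kv: kv[1])
        seq.append(token)
        score += math.log(p)
    return seq, score


def beam_search(bos: str, eos: str, num_beams: int, max_length: int) -> Tuple[List[str], float]:
    """Keep the num_beams best partial sequences (by total log-probability) at each step."""
    beams: List[Tuple[List[str], float]] = [([bos], 0.0)]
    finished: List[Tuple[List[str], float]] = []
    for _ in range(max_length - 1):
        # Expand every live beam by every possible next token
        candidates = [
            (seq + [token], score + math.log(p))
            for seq, score in beams
            for token, p in next_probs(seq).items()
        ]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates:
            if seq[-1] == eos:
                finished.append((seq, score))  # hypothesis is complete
            else:
                beams.append((seq, score))
            if len(beams) == num_beams:
                break
        # Simplified early stopping: stop once num_beams hypotheses have finished
        if len(finished) >= num_beams or not beams:
            break
    if len(finished) < num_beams:
        finished.extend(beams)  # fall back to unfinished beams at max_length
    return max(finished, key=lambda c: c[1])


g_seq, g_score = greedy("<s>", "</s>", max_length=3)
b_seq, b_score = beam_search("<s>", "</s>", num_beams=2, max_length=3)
print("greedy:", " ".join(g_seq), f"p={math.exp(g_score):.2f}")  # greedy: <s> A </s> p=0.30
print("beam:", " ".join(b_seq), f"p={math.exp(b_score):.2f}")    # beam: <s> B </s> p=0.36
```

Larger `num_beams` values explore more alternatives at a proportionally higher compute cost per generated token.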

Text Generation Example

```python
# Prompt-based text generation (reuses tokenizer and model from the summarization example)
prompt = "With the advancement of artificial intelligence"
inputs = tokenizer(prompt, return_tensors='pt')
outputs = model.generate(
    inputs['input_ids'],
    max_length=100,
    temperature=0.8,
    do_sample=True,
    top_k=50,
)

generated_text = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(generated_text)
```

Model Specifications

- Architecture: BART

- Parameters: 123M

- Vocabulary Size: 30,000

- Max Sequence Length: 1,024

- Encoder Layers: 6

- Decoder Layers: 6

Fine-tuning Guide

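```python
from transformers import Trainer, TrainingArguments

# Fine-tuning configuration
training_args = TrainingArguments(
    output_dir='./kobart-finetuned',
    num_train_epochs=3,
    per_device_train_batch_size=8,
    per_device_eval_batch_size=8,
    warmup_steps=500,
    weight_decay=0.01,
    logging_dir='./logs',
    eval_strategy="epoch",  # named evaluation_strategy in older transformers releases
)

# Create Trainer
# model: the KoBART model loaded above
# train_dataset / eval_dataset: tokenized datasets you prepare yourself, where each
# example has input_ids, attention_mask, and labels (target token IDs)
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
)

# Start training
trainer.train()
```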
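The `Trainer` call above assumes that `train_dataset` and `eval_dataset` already exist. For a summarization task, each example usually pairs encoder inputs with target token IDs in a `labels` field. `BartForConditionalGeneration` derives the decoder inputs from `labels` when they are not passed explicitly. Label positions set to -100 are ignored by the cross-entropy loss.

The dependency-free sketch below builds one such padded example from already-tokenized IDs. The function name, the token IDs, and the pad ID of 3 are hypothetical placeholders; in practice, read the pad ID from your tokenizer's `pad_token_id`.

```python
from typing import Dict, List

IGNORE_INDEX = -100  # label value the cross-entropy loss skips


def build_seq2seq_example(source_ids: List[int], target_ids: List[int], pad_id: int,
                          max_source_len: int, max_target_len: int) -> Dict[str, List[int]]:
    """Truncate and pad one (document, summary) pair into fixed-length model inputs."""
    source = source_ids[:max_source_len]
    target = target_ids[:max_target_len]
    source_pad = max_source_len - len(source)
    target_pad = max_target_len - len(target)
    return {
        "input_ids": source + [pad_id] * source_pad,
        # 1 marks real tokens, 0 marks padding the encoder should ignore
        "attention_mask": [1] * len(source) + [0] * source_pad,
        # Padded label positions use IGNORE_INDEX so they do not contribute to the loss
        "labels": target + [IGNORE_INDEX] * target_pad,
    }


# Hypothetical token IDs and pad ID, for illustration only
example = build_seq2seq_example([0, 101, 102, 103, 1], [0, 201, 1], pad_id=3,
                                max_source_len=8, max_target_len=5)
print(example)
```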

Resources

- GitHub: https://github.com/SKT-AI/KoBART

- Hugging Face: gogamza/kobart-base-v2

5 - onot

onot is a compliance tool that automatically generates open source license notices based on SPDX (Software Package Data Exchange) documents. It was jointly developed by SK Telecom and Kakao and released as open source.

Project Information

- Developer: SK Telecom & Kakao (Joint Development)

- License: Apache License 2.0

- GitHub: https://github.com/sktelecom/onot

Key Features

1. SPDX-based Automation

- SPDX 2.3 standard support

- Support for JSON, RDF, YAML, Tag-Value formats

- Automatic parsing and validation

2. Multiple Output Formats

- HTML license notices

- Markdown license notices

- Excel format

- Custom template support

3. Compliance Support

- Automatic organization of license obligations

- Copyright information aggregation

- Indication of source code availability

- Automatic determination of notice requirements

Installation and Usage

Installation

```bash
# Install from PyPI
pip install onot

# Or install from source
git clone https://github.com/sktelecom/onot.git
cd onot
pip install -e .
```

Basic Usage

```bash
# Generate HTML license notice from SPDX file
onot -i sbom.spdx.json -o notice.html

# Generate in Markdown format
onot -i sbom.spdx.json -o notice.md -f markdown

# Generate in Excel format
onot -i sbom.spdx.json -o notice.xlsx -f excel
```

SPDX Document Example

```json
{
  "spdxVersion": "SPDX-2.3",
  "dataLicense": "CC0-1.0",
  "SPDXID": "SPDXRef-DOCUMENT",
  "name": "MyProject",
  "packages": [
    {
      "SPDXID": "SPDXRef-Package-1",
      "name": "express",
      "versionInfo": "4.18.2",
      "licenseConcluded": "MIT",
      "copyrightText": "Copyright (c) 2009-2014 TJ Holowaychuk",
      "downloadLocation": "https://registry.npmjs.org/express/-/express-4.18.2.tgz"
    }
  ]
}
```
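The sketch below makes the SPDX-to-notice idea concrete. It reads an SPDX JSON document like the one above and groups packages by their concluded license into a Markdown notice. It is not onot's implementation or output format. It uses only the Python standard library and reads only the package fields shown in the example. The function name `build_notice` is hypothetical, and `SPDX_DOCUMENT` is a trimmed copy of the example document.

```python
import json
from collections import defaultdict

# Trimmed copy of the SPDX example above
SPDX_DOCUMENT = """{
  "spdxVersion": "SPDX-2.3",
  "name": "MyProject",
  "packages": [{
    "SPDXID": "SPDXRef-Package-1",
    "name": "express",
    "versionInfo": "4.18.2",
    "licenseConcluded": "MIT",
    "copyrightText": "Copyright (c) 2009-2014 TJ Holowaychuk",
    "downloadLocation": "https://registry.npmjs.org/express/-/express-4.18.2.tgz"
  }]
}"""

# SPDX special values for "no value" / "not asserted", plus empty strings
UNKNOWN = {"NONE", "NOASSERTION", ""}


def build_notice(spdx_json: str) -> str:
    """Render a Markdown license notice, grouping packages by concluded license."""
    doc = json.loads(spdx_json)
    by_license = defaultdict(list)
    for pkg in doc.get("packages", []):
        by_license[pkg.get("licenseConcluded") or "NOASSERTION"].append(pkg)

    lines = [f"# Open Source Notice: {doc.get('name', 'Unnamed project')}", ""]
    for license_id in sorted(by_license):
        lines += [f"## {license_id}", ""]
        for pkg in sorted(by_license[license_id], key=lambda p: p.get("name", "")):
            lines.append(f"- {pkg.get('name', 'unknown')} {pkg.get('versionInfo', '')}".rstrip())
            copyright_text = pkg.get("copyrightText", "")
            if copyright_text not in UNKNOWN:
                lines.append(f"  - {copyright_text}")
            location = pkg.get("downloadLocation", "")
            if location not in UNKNOWN:
                lines.append(f"  - Source: {location}")
        lines.append("")
    return "\n".join(lines)


print(build_notice(SPDX_DOCUMENT))
```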

License

Apache License 2.0 - Commercial use allowed

Resources

- GitHub: https://github.com/sktelecom/onot

- Issues: GitHub Issues

6 - SKT Passkey

SKT Passkey is a passwordless authentication solution based on the WebAuthn (FIDO2) standard. It provides a secure, convenient login experience using biometrics or a device PIN. It can also be integrated with SK Telecom’s Passkey Platform to build reliable, enterprise-grade authentication systems.

Project Information

- Developer: SK Telecom Passkey Team

- License: Apache License 2.0

- GitHub Organization: https://github.com/skt-passkey

- Main Repository:

- passkey-rp-sample - Relying Party sample application

What is Passkey?

A passkey is a secure and convenient authentication method that replaces traditional passwords:

- Passwordless: No need to remember or manage passwords

- Secure: Uses cryptographic authentication with device-bound credentials

- Convenient: Biometric or device PIN-based authentication

- Phishing-resistant: Resistant to phishing and credential theft attacks

- Interoperable: Works across different platforms and devices

Advantages of SKT Passkey Platform

1. Enterprise-Grade Reliability

- Large-scale deployment validation

- 24/7 stable service

- Utilizing SK Telecom’s infrastructure

2. Easy Integration

- RESTful API provided

- Developer-friendly SDK

- Comprehensive documentation and sample code

- OAuth2-based authentication

3. Standards Compliance

- W3C WebAuthn standard

- FIDO2 authentication

- Open standard support

4. Multi-platform Support

- Web browsers (Chrome, Safari, Edge, etc.)

- iOS applications

- Android applications

- Cross-device authentication

Architecture

Key Components

Authenticator: Device that performs authentication

- Built-in authenticators (fingerprint, face recognition)

- External security keys

- Platform-specific authenticators

Relying Party (RP): Your application that uses Passkey

- Communicates with the Passkey platform

- Verifies authentication responses

- Manages user credentials

Passkey Platform: SK Telecom’s authentication service

- Handles registration and authentication flows

- Manages credential lifecycle

- Provides API and SDKs

Use Cases

Consumer Services

- Financial services and banking

- E-commerce and retail

- Content streaming platforms

- Social media and messaging

Enterprise Applications

- Single Sign-On (SSO)

- VPN and remote access

- Internal applications

- Workforce identity management

Mobile Applications

- In-app authentication

- Biometric-based login

- Secure transaction verification

Integration Flow

```text
1. User Registration
   ├─ Generate credential pair (public/private key)
   ├─ Store public key on the server
   └─ Store private key on the device

2. Authentication
   ├─ User initiates login
   ├─ Server sends challenge
   ├─ Device signs challenge with private key
   ├─ Server verifies signature with public key
   └─ User authenticated
```
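The registration and authentication steps above can be simulated end to end in a few lines. The sketch below is a toy. It uses textbook RSA with two fixed, well-known primes only because the Python standard library has no public-key signature API. As a result, every call returns the same key pair, and the key is far too small to be secure.

Real passkeys differ in two ways: they use authenticator-generated keys with algorithms such as ES256, and they sign WebAuthn-specific data structures. The class and function names here are hypothetical and are not part of the SKT Passkey SDK or API.

To mimic WebAuthn, the origin is folded into the signed data, which binds each signature to the site that requested it. Each challenge is accepted only once.

```python
import hashlib
import secrets
from typing import Dict, Optional, Tuple

# TOY key material: two Mersenne primes and the common public exponent.
# Far too small and completely predictable; never use this outside a demo.
_P, _Q, _E = 2**31 - 1, 2**61 - 1, 65537


def generate_credential() -> Tuple[Dict[str, int], Dict[str, int]]:
    """Device side: create (public_key, private_key); only the public key goes to the server."""
    n = _P * _Q
    d = pow(_E, -1, (_P - 1) * (_Q - 1))  # modular inverse (Python 3.8+)
    return {"n": n, "e": _E}, {"n": n, "d": d}


def _digest(challenge: bytes, origin: str, n: int) -> int:
    """Hash the challenge together with the origin, reduced modulo n."""
    data = challenge + origin.encode("utf-8")
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n


def device_sign(private_key: Dict[str, int], challenge: bytes, origin: str) -> int:
    """Device side: sign the server's challenge for the origin the browser is on."""
    n = private_key["n"]
    return pow(_digest(challenge, origin, n), private_key["d"], n)


class RelyingParty:
    """Server side: stores public keys and issues single-use challenges."""

    def __init__(self, origin: str):
        self.origin = origin
        self.public_keys: Dict[str, Dict[str, int]] = {}
        self.pending: Dict[str, bytes] = {}

    def register(self, user: str, public_key: Dict[str, int]) -> None:
        self.public_keys[user] = public_key  # only the public key is stored

    def start_login(self, user: str) -> bytes:
        challenge = secrets.token_bytes(32)  # fresh random challenge per attempt
        self.pending[user] = challenge
        return challenge

    def finish_login(self, user: str, signature: int) -> bool:
        challenge: Optional[bytes] = self.pending.pop(user, None)  # single use: replays fail
        key = self.public_keys.get(user)
        if challenge is None or key is None:
            return False
        # Verify against this server's own origin, so signatures made on another site fail
        return pow(signature, key["e"], key["n"]) == _digest(challenge, self.origin, key["n"])


rp = RelyingParty("https://login.example.com")  # hypothetical origin
public_key, private_key = generate_credential()
rp.register("alice", public_key)

challenge = rp.start_login("alice")
signature = device_sign(private_key, challenge, "https://login.example.com")
print(rp.finish_login("alice", signature))  # True
print(rp.finish_login("alice", signature))  # False: the challenge was already used
```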

Security Features

Credential Security

- Private keys never leave the user’s device

- Cryptographically bound to specific devices

- Protected by device security mechanisms (TPM, Secure Enclave)

Attack Resistance

- Phishing-resistant: Each credential is bound to the relying party's domain, so a look-alike site cannot obtain a valid signature

- Replay-attack resistant: Challenge-response mechanism

- Credential theft resistant: Biometric/PIN protection

User Privacy

- No shared secrets across accounts

- Server never sees biometric data

- Privacy-preserving authentication

Resources

Official Documentation

Related Standards and Documentation

- W3C WebAuthn: https://www.w3.org/TR/webauthn/

- FIDO Alliance: https://fidoalliance.org/

- Passkey: https://www.passkey-sktelecom.com/

License

Apache License 2.0 - Commercial use allowed